We use JSDOM for testing clientside applications in NodeJS. JSDOM lowers the complexity of writing tests for clientside code by omitting the browser and replacing it with a fake one: JSDOM.

However, there’s one JSDOM dependency that concerned me a bit: request, with request-promise-native. Request has been deprecated, and request-promise-native does nasty things using stealthy-require. I’m not sure why anyone would use stealthy-require, but I trust there’s a good reason for to use it.

request has already been a discussed to be replaced with something else in an issue #2792: Replace request with something better. Since there were no pull requests for the issue, I decided to see if I can help out and fix it myself. In this blog post, I’ll describe my process.

Changing code inside a foreign project is commonly quite the challenge. There is usually a lot of code and a lot of things to consider, many you just don’t know about. This is why tests are really important.

For a complex project like JSDOM, without a comprehensive suite of tests, there is no way to be sure your changes might break something. Even with perfect code coverage, there is still no guarantee your changes don’t break something, but you can still be pretty sure your code at least runs in the cases presented by the tests.

I forked & cloned the repository, and created a new branch to start my experimental replacement.

git clone git@github.com:tobyhinloopen/jsdom.git

cd jsdom

git checkout -b 2792-replace-request-with-node-fetch

Now let’s see if there are some tests I can run.

$ npm i

npm ERR! code EUNSUPPORTEDPROTOCOL

npm ERR! Unsupported URL Type "link:": link:./scripts/eslint-plugin

npm ERR! A complete log of this run can be found in:

npm ERR! /Users/hinloopen/.npm/_logs/2020-05-10T15_02_02_981Z-debug.log

Uh… ok. Let’s consult the README first. There is a README.md and Contributing.md. Both might be relevant.

In Contributing.md, it’s already mentioned they’re using yarn. Eager to start, I ignore the rest and use yarn install to install the dependencies.

Let’s run some tests without consulting the readme or contributing guidelines and see if they run.

$ yarn test

# ...

1) "before all" hook: $mochaNoSugar in "{root}"

2) "after all" hook: $mochaNoSugar in "{root}"

0 passing (16ms)

2 failing

1) "before all" hook: $mochaNoSugar in "{root}":

Error: Host entries not present for web platform tests. See https://github.com/web-platform-tests/wpt#running-the-tests

at /Users/hinloopen/Projects/Github/jsdom/test/web-platform-tests/start-wpt-server.js:62:13

at async /Users/hinloopen/Projects/Github/jsdom/test/web-platform-tests/run-tuwpts.js:25:32

# ...

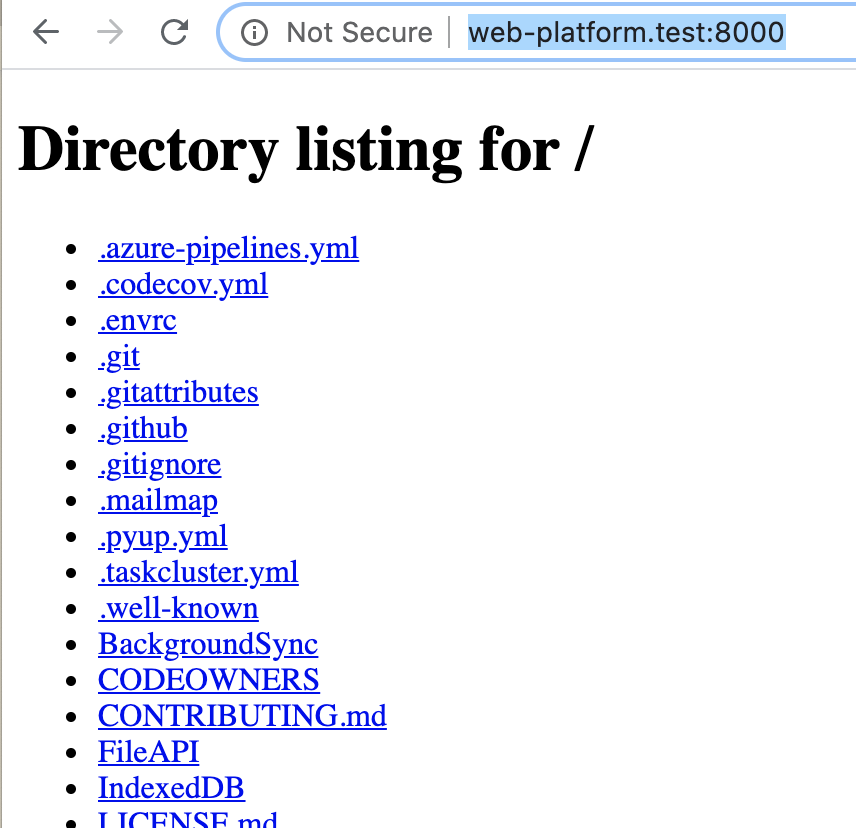

Looks like the tests require more setup. Let’s consult the readme again. The readme refers to The web-platform-tests Project. It looks like this project allows you to run a test suite (that you have to provide yourself in some way) inside a set of browsers. You have to clone the repo and run the code.

I’ll just assume this web-platform-tests project starts some kind of server and you have to open a page in a real browser. Since we’re testing a fake browser (JSDOM), I also assume JSDOM somehow registers to WPT as a real browser, so it can the same tests in JSDOM, as if JSDOM was a browser. Let’s try it out.

$ git clone https://github.com/web-platform-tests/wpt.git

# ...

$ cd wpt

$ ./wpt serve

# ...

CRITICAL:web-platform-tests:Failed to start HTTP server on port 59514; is something already using that port?

CRITICAL:web-platform-tests:Please ensure all the necessary WPT subdomains are mapped to a loopback device in /etc/hosts.

Right. RTFM. I added the setup instructions to .envrc in the WPT project folder.

$ nano .envrc

python -m ensurepip --user

export PATH="$PATH:$HOME/Library/Python/2.7/bin"

pip install --user virtualenv

Additionally:

To get the tests running, you need to set up the test domains in your hosts file. (source)

Let’s do that.

./wpt make-hosts-file | sudo tee -a /etc/hosts

# ...

I think that command fails when a password is asked. I used sudo ls to make my system ask for a password so I can run another sudo command without asking for password. I’m sure there is a better way, but hey, it works.

After that, let’s retry serve:

$ ./wpt serve

# ...

INFO:web-platform-tests:Starting http server on web-platform.test:8000

INFO:web-platform-tests:Starting http server on web-platform.test:59632

INFO:web-platform-tests:Starting https server on web-platform.test:8443

Hey, it works! Let’s open it with a browser!

Well that’s not very interesting at all. Am I done now? Let’s get back to JSDOM and run the tests.

yarn test

# ...

Cool! It’s running tests. Thousands of them. While the tests are running and are heating my macbook, let’s take a peak at our goal: Removing request. Let’s see where it is used.

The first, most naive way to find usages of request is to look for require("request") and require("request-promise-native"):

"use strict";

const request = require("request");

module.exports = cookieJar => {

const jarWrapper = request.jar();

jarWrapper._jar = cookieJar;

return jarWrapper;

};

// ...

const request = require("request");

// ...

const wrapCookieJarForRequest = require("../helpers/wrap-cookie-jar-for-request");

// ...

function doRequest() {

try {

const client = request(options);

if (hasBody && flag.formData) {

const form = client.form();

for (const entry of body) {

form.append(entry.name, entry.value, entry.options);

}

}

return client;

} catch (e) {

const client = new EventEmitter();

process.nextTick(() => client.emit("error", e));

return client;

}

}

/// ...

// ...

const request = require("request");

// ...

/**

* Reads a static fixture file as utf8.

* If running tests from node, the file will be read from the file system

* If running tests using karma, a http request will be performed to retrieve the file using karma's server.

* @param {string} relativePath Relative path within the test directory. For example "jsdom/files/test.html"

*/

exports.readTestFixture = relativePath => {

const useRequest = exports.inBrowserContext();

return exports.nodeResolverPromise(nodeResolver => {

if (useRequest) {

request.get(exports.getTestFixtureUrl(relativePath), { timeout: 5000 }, nodeResolver);

} else {

fs.readFile(path.resolve(__dirname, relativePath), { encoding: "utf8" }, nodeResolver);

}

})

// request passes (error, response, content) to the callback

// we are only interested in the `content`

.then(result => useRequest ? result[1] : result);

};

// ...

const request = require("request-promise-native");

const wrapCookieJarForRequest = require("../../living/helpers/wrap-cookie-jar-for-request");

// ...

fetch(urlString, options = {}) {

const url = parseURL(urlString);

// ...

switch (url.scheme) {

// ...

case "http":

case "https": {

const requestOptions = this._getRequestOptions(options);

return request(urlString, requestOptions);

}

// ...

}

}

// ...

const requestHead = require("request-promise-native").head;

// ...

function pollForServer(url) {

return requestHead(url, { strictSSL: false })

.then(() => {

// ...

}

Looks good! Looking for require('request') yields no results, so I’ll assume there is either a strict merge policy or some kind of linter ensuring double quoted strings are used everywhere.

There might be other ways request or request-promise-native is required. One could have aliased the require to something else. Maybe someone used require("re" + "quest") to mess with me. Maybe someone’s using import somewhere.

Instead of hunting other possible dependencies, let’s try to fix the found dependencies first and re-run the tests.

Running all tests takes ages. However, I’m not sure how to narrow down the number of tests. While trying to figure out how to narrow down the number of tests, the test runner finally finished after 11 minutes.

Reading the contributing guidelines, it is mentioned you can run only JSDOM api tests, or even a set of tests for one specific function. Since the JSDOM API includes a fromUrl function, I’ll assume fromUrl fetches the document using request.

There is a test suite specifically for fromUrl and based on the contributing guidelines, I can run it using yarn test-mocha test/api/from-url.js. Let’s try that.

$ yarn test-mocha test/api/from-url.js

yarn run v1.22.4

$ mocha test/api/from-url.js

API: JSDOM.fromURL()

✓ should return a rejected promise for a bad URL

✓ should return a rejected promise for a 404

✓ should return a rejected promise for a 500

✓ should use the body of 200 responses (54ms)

✓ should use the body of 301 responses

✓ should be able to handle gzipped bodies

✓ should send a HTML-preferring Accept header

✓ should send an Accept-Language: en header

user agent

✓ should use the default user agent as the User-Agent header when none is given

referrer

✓ should reject when passing an invalid absolute URL for referrer

✓ should not send a Referer header when no referrer option is given

✓ should use the supplied referrer option as a Referer header

✓ should canonicalize referrer URLs before using them as a Referer header

✓ should use the redirect source URL as the referrer, overriding a provided one

inferring options from the response

url

✓ should use the URL fetched for a 200

✓ should preserve full request URL

✓ should use the ultimate response URL after a redirect

✓ should preserve fragments when processing redirects

✓ should disallow passing a URL manually

contentType

✓ should use the content type fetched for a 200

✓ should use the ultimate response content type after a redirect

✓ should disallow passing a content type manually

cookie jar integration

✓ should send applicable cookies in a supplied cookie jar

✓ should store cookies set by the server in a supplied cookie jar

✓ should store cookies set by the server in a newly-created cookie jar

25 passing (234ms)

✨ Done in 1.09s.

Phew. That’s better. One second. Let’s first try to break these tests by changing the code that requires request. I’m hoping these tests touches the request-requires at some point.

The test messages also mention cookie jar. I’m hoping this is somehow related to lib/jsdom/living/helpers/wrap-cookie-jar-for-request.js so we can test our changes in that file using this test.

Before we can drop request, we need a replacement. I’ll be using node-fetch. node-fetch is a NodeJS implementation for the browser’s Fetch API. I like the idea of using a library that implements an existing standard because even if you don’t longer like or want to use the library, you can just replace the fetch library with some other fetch implementation.

Since JSDOM also runs in the browser, you can use the browser’s Fetch implementation in the browser. Isn’t that great?

npm install nod– oh right, we’re using YARN now.

$ yarn install node-fetch

error `install` has been replaced with `add` to add new dependencies. Run "yarn add node-fetch" instead.

$ yarn add node-fetch

# ...

✨ Done in 7.80s.

Ok. Now, let’s naively replace request with fetch somewhere. Let’s start with test/util.js, since I’ll assume it’s only used from tests. It is most likely the easiest one to replace.

// ...

const fetch = require("node-fetch");

// ...

exports.readTestFixture = relativePath => {

const useRequest = exports.inBrowserContext();

if (useRequest) {

const url = exports.getTestFixtureUrl(relativePath);

// timeout is a node-fetch specific extention.

fetch(url, { timeout: 5000 }).then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status ${response.status} fetching ${url}`);

}

return response.text();

});

} else {

return exports.nodeResolverPromise(nodeResolver => {

fs.readFile(path.resolve(__dirname, relativePath), { encoding: "utf8" }, nodeResolver);

});

}

};

Looks fine, I suppose. Let’s run the tests.

$ yarn test-mocha test/api/from-url.js

yarn run v1.22.4

$ mocha test/api/from-url.js

# ...

25 passing (234ms)

✨ Done in 1.02s.

All tests are passing, but I don’t know if the tests even touch my changes. Let’s just throw inside the method.

exports.readTestFixture = relativePath => {

const useRequest = exports.inBrowserContext();

if (useRequest) {

throw new Error("???");

// ...

$ yarn test-mocha test/api/from-url.js

yarn run v1.22.4

$ mocha test/api/from-url.js

# ...

25 passing (234ms)

✨ Done in 1.02s.

No thrown errors or failing tests, so it’s still not touching my changes. Let’s run all API tests for good measure. Otherwise, I’ll have to run all tests.

yarn test-api

# ...

419 passing (4s)

✨ Done in 4.56s.

Still no error. Let’s run all tests until something goes bad. While the tests are running forever, let’s CMD+F for readTestFixture.

It looks like all occurences are in test/to-port-to-wpts. CMD+F for to-port-to-wpts yields to this result in the readme:

Ideally we would only use Mocha for testing the JSDOM API itself, from the outside. Unfortunately, a lot of web platform features are still tested using Mocha, instead of web-platform-tests. These are located in the

to-port-to-wptssubdirectory.

So maybe running all mocha tests will trigger my intentional failure. While the main test suite is running, I run the mocha tests using yarn test-mocha, hoping it will run faster.

After a minute, I cancelled the mocha runner since it there seems to be no obvious speed improvement by invoking mocha this way.

What about yarn test-mocha test/to-port-to-wpts/*.js?

$ yarn test-mocha test/to-port-to-wpts/*.js

379 passing (6s)

1 pending

✨ Done in 9.78s.

That runs the tests, but the tests aren’t failing. Confused, I read the jsdoc comment above the function:

/**

* Reads a static fixture file as utf8.

* If running tests from node, the file will be read from the file system

* If running tests using karma, a http request will be performed to retrieve the file using karma's server.

* @param {string} relativePath Relative path within the test directory. For example "jsdom/files/test.html"

*/

exports.readTestFixture = relativePath => {

So my error will only be thrown when running from inside a browser. Well, I don’t need node-fetch inside a browser, do I? I can just use window.fetch, but I won’t get the timeout, since the timeout option isn’t supported on window.fetch.

How did request implement the timeout? I suppose it uses XMLHttpRequest in the background and aborts after a certain amount of time. Let’s ignore that for now and see if we can run the tests inside a browser. The jsdoc mentions karma. Let’s CMD+F karma in the readmes.

The mocha test cases are executed in Chrome using karma. Currently, web platform tests are not executed in the browser yet.

To run all browser tests:

yarn test-browserTo run the karma tests in an iframe:

yarn test-browser-iframeTo run the karma tests in a web worker:

yarn test-browser-worker

Sure. Let’s try that.

$ yarn test-browser

yarn run v1.22.4

$ yarn test-browser-iframe && yarn test-browser-worker

$ karma start test/karma.conf.js

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4) ERROR

Uncaught Error: ???

at /var/folders/bf/29ljwt3s4dscb7tdd2z5zz0h0000gn/T/test/util.js:162:1 <- /var/folders/bf/29ljwt3s4dscb7tdd2z5zz0h0000gn/T/91efe4665a6210ee2f5edcae3a8f463c.browserify.js:293540:5

Error: ???

at exports.readTestFixture (/var/folders/bf/29ljwt3s4dscb7tdd2z5zz0h0000gn/T/test/util.js:162:1 <- /var/folders/bf/29ljwt3s4dscb7tdd2z5zz0h0000gn/T/91efe4665a6210ee2f5edcae3a8f463c.browserify.js:293540:11)

[...]

My ??? error is thrown! Now, let’s retry without the intentional failure.

$ yarn test-browser

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4) jsdom/namespaces should set namespaces in HTML documents created by jsdom.env() FAILED

TypeError: Cannot read property 'then' of undefined

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4) jsdom/namespaces should set namespace-related properties in HTML documents created by innerHTML FAILED

TypeError: Cannot read property 'then' of undefined

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4) jsdom/namespaces should set namespace-related properties in HTML-SVG documents created by jsdom.env() FAILED

TypeError: Cannot read property 'then' of undefined

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4) jsdom/namespaces should set namespace-related properties in HTML-SVG documents created by innerHTML FAILED

TypeError: Cannot read property 'then' of undefined

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4) jsdom/parsing real-world page with < inside a text node (GH-800) FAILED

TypeError: Cannot read property 'then' of undefined

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4) jsdom/xml should ignore self-closing of tags in html docs FAILED

TypeError: Cannot read property 'then' of undefined

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4) jsdom/xml should handle self-closing tags properly in xml docs FAILED

TypeError: Cannot read property 'then' of undefined

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4): Executed 1209 of 2460 (7 FAILED) (skipped 1251) (7.437 secs / 6.708 secs)

TOTAL: 7 FAILED, 1202 SUCCESS

error Command failed with exit code 1.

info Visit https://yarnpkg.com/en/docs/cli/run for documentation about this command.

error Command failed with exit code 1.

info Visit https://yarnpkg.com/en/docs/cli/run for documentation about this command.

Failures! TypeError: Cannot read property 'then' of undefined? Oh… i forgot to return. Oops.

if (useRequest) {

const url = exports.getTestFixtureUrl(relativePath);

// timeout is a node-fetch specific extension

return fetch(url, { timeout: 5000 }).then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status ${response.status} fetching ${url}`);

}

return response.text();

});

}

$ yarn test-browser

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4): Executed 1209 of 2460 (skipped 1251) SUCCESS (7.497 secs / 6.723 secs)

TOTAL: 1209 SUCCESS

That’s great! Now, since it’s run inside a browser, let’s drop the node-fetch requirement and use the browser’s fetch.

if (exports.inBrowserContext()) {

return fetch(exports.getTestFixtureUrl(relativePath)).then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status ${response.status} fetching ${response.location}`);

}

return response.text();

});

}

$ yarn test-browser

[...]

HeadlessChrome 81.0.4044 (Mac OS X 10.15.4): Executed 1209 of 2460 (skipped 1251) SUCCESS (7.561 secs / 6.812 secs)

TOTAL: 1209 SUCCESS

Great. The best dependency is the one not being used, am I right?

The second request usage by tests is inside test/web-platform-tests/start-wpt-server.js.

// ...

const requestHead = require("request-promise-native").head;

// ...

function pollForServer(url) {

return requestHead(url, { strictSSL: false })

.then(() => {

console.log(`WPT server at ${url} is up!`);

return url;

})

.catch(err => {

console.log(`WPT server at ${url} is not up yet (${err.message}); trying again`);

return new Promise(resolve => {

setTimeout(() => resolve(pollForServer(url)), 500);

});

});

}

Based on the name of the file and some of the error messages, it looks like this code is used to check whether WPT is running. This code is used at the start of the test runner. That should be easy enough to test. Let’s replace request with node-fetch.

The strictSSL option is no part of the Fetch standard, but stack overflow tells me I can use rejectUnauthorized: false instead.

const fetch = require("node-fetch");

const https = require("https");

// ...

const httpsAgent = new https.Agent({

rejectUnauthorized: false,

});

function pollForServer(url) {

const agent = url.startsWith("https")

? new https.Agent({ rejectUnauthorized: false })

: null;

return fetch(url, { method: "HEAD", agent })

.then(({ ok, status }) => {

if (!ok) {

throw new Error(`Unexpected status=${status}`);

}

console.log(`WPT server at ${url} is up!`);

return url;

})

.catch(err => {

console.log(`WPT server at ${url} is not up yet (${err.message}); trying again`);

return new Promise(resolve => {

setTimeout(() => resolve(pollForServer(url)), 500);

});

});

}

I’ve added throw new Error("Foo") (not shown above) to intentionally fail at first. Let’s run the tests and see if they fail. I’ll assume they fail early, so I’ll run all tests.

$ yarn test

[...]

1) "before all" hook: $mochaNoSugar in "{root}"

2) "after all" hook: $mochaNoSugar in "{root}"

0 passing (22ms)

2 failing

1) "before all" hook: $mochaNoSugar in "{root}":

Error: foo

I was right. Let’s kill it and retry without the intentional failure.

$ yarn test

[...]

The tests are running again. I let them run, but I assume my change is fine.

Now that the test utilities are fixed, let’s get our hands dirty on the lib code. There are only 2 files where request is actually invoked. The 3rd is only a helper:

"use strict";

const request = require("request");

module.exports = cookieJar => {

const jarWrapper = request.jar();

jarWrapper._jar = cookieJar;

return jarWrapper;

};

Since this helper is a dependency of the other 2 files, I’ll look at the helper last. Let’s first look at resource-loader.

// ...

const request = require("request-promise-native");

const wrapCookieJarForRequest = require("../../living/helpers/wrap-cookie-jar-for-request");

// ...

_getRequestOptions({ cookieJar, referrer, accept = "*/*" }) {

const requestOptions = {

encoding: null,

gzip: true,

jar: wrapCookieJarForRequest(cookieJar),

strictSSL: this._strictSSL,

proxy: this._proxy,

forever: true,

headers: {

"User-Agent": this._userAgent,

"Accept-Language": "en",

Accept: accept

}

};

if (referrer && !IS_BROWSER) {

requestOptions.headers.referer = referrer;

}

return requestOptions;

}

// ...

fetch(urlString, options = {}) {

const url = parseURL(urlString);

// ...

switch (url.scheme) {

// ...

case "http":

case "https": {

const requestOptions = this._getRequestOptions(options);

return request(urlString, requestOptions);

}

// ...

}

}

Seems easy enough. Let’s convert the request options to fetch options.

encoding: null: This causes request to return a buffer. With node-fetch, we might be able to use response.arrayBuffer() for that.jar: wrapCookieJarForRequest(cookieJar): Somehow cookies are reused this way. The cookieJar variable is converted to a request-compatible cookie jar to allow keeping track of cookies. I don’t know if fetch has features like this. I suppose we can just manually read/write the cookies.strictSSL: this._strictSSL: Just like before, use the HTTPS agent with rejectUnauthorized.proxy: this._proxy: Enables proxy. There is no obvious way to implement this in node-fetch. I also don’t know what’s in this._proxy. I might need to use https-proxy-agent for this.forever: true: Sets keepAlive on the HTTPS agent. Since we’re replacing the agent anyway, we might as well set keepAlive: true for both http and https agents.Let’s make a first attempt to implement resource-loader’s fetch function using fetch instead of request. Because I don’t know how to implement the proxy or cookies, I’ll ignore those for now.

_getFetchOptions({ cookieJar, referrer, accept = "*/*" }) {

/** @type RequestInit */

const fetchOptions = {};

// I don't know what these variables hold exactly - let's log them!

console.log("cookieJar", cookieJar);

console.log("this._proxy", this._proxy);

fetchOptions.headers = {

"User-Agent": this._userAgent,

"Accept-Language": "en",

Accept: accept,

};

if (!IS_BROWSER) {

const httpAgent = new http.Agent({ keepAlive: true });

const httpsAgent = new https.Agent({ keepAlive: true, rejectUnauthorized: this._strictSSL });

fetchOptions.headers.referrer = referrer;

fetchOptions.agent = (url) => url.protocol == 'http:' ? httpAgent : httpsAgent;

}

return fetchOptions;

}

// ...

case "http":

case "https": {

return fetch(urlString, this._getFetchOptions(options))

.then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status=${response.status} for ${urlString}`);

}

return response.arrayBuffer();

})

}

Let’s run the tests and see the mess I’ve created. I get a lot of failures from the tests, as expected. Some are related to cookies. The console.logs look like this:

cookieJar CookieJar { enableLooseMode: true, store: { idx: {} } }

this._proxy undefined

cookieJar CookieJar { enableLooseMode: true, store: { idx: {} } }

this._proxy http://127.0.0.1:51388

So the proxy is just a URL. I’m not sure how to implement the proxy from fetch, if it is even possible. I suppose I can use a proxy agent on the server, but I don’t know an alternative for the browser.

The cookie jar is still a mystery. Since package.json mentions tough-cookie, I’ll just assume the cookie jar is from that library. I’m just going to assume this is also only used server-side, since the browser’s fetch handles cookies automatically.

To add tough-cookie’s cookie-jar to node-fetch, I’m going to use a library called fetch-cookie. fetch-cookie has no other dependencies except for tough-cookie so it can be used independently from Fetch implementations. fetch-cookie is also pretty small: about 50 lines of code.

yarn add fetch-cookie

_getFetchOptions({ cookieJar, referrer, accept = "*/*" }) {

/** @type RequestInit */

const fetchOptions = {};

// I don't know what these variables hold exactly - let's log them!

console.log("cookieJar", cookieJar);

console.log("this._proxy", this._proxy);

fetchOptions.headers = {

"User-Agent": this._userAgent,

"Accept-Language": "en",

"Accept-Encoding": "gzip",

Accept: accept,

};

if (!IS_BROWSER) {

const httpAgent = new http.Agent({ keepAlive: true });

const httpsAgent = new https.Agent({ keepAlive: true, rejectUnauthorized: this._strictSSL });

fetchOptions.headers.referrer = referrer;

fetchOptions.agent = (url) => url.protocol == 'http:' ? httpAgent : httpsAgent;

}

return fetchOptions;

}

// ...

case "http":

case "https": {

const cookieJar = options.cookieJar;

cookieJar.__setCookie = cookieJar.setCookie;

cookieJar.setCookie = (...args) => {

if (args.length === 3) {

args.splice(2, 0, {});

}

if (args.length === 4) {

args[2].ignoreError = true;

}

return cookieJar.__setCookie(...args);

}

const targetFetch = fetchCookie(fetch, cookieJar);

const fetchOptions = this._getFetchOptions(options);

return targetFetch(urlString, fetchOptions)

.then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status=${response.status} for ${urlString}`);

}

return response.arrayBuffer();

});

}

I got a lot of errors when handling the cookies. Turns out, when adding cookies, the request library sets ignoreError on true by default (like a browser would do), but fetch-cookie doesn’t allow you to change the options when setting cookies.

To “fix” this, I hijacked the setCookie function to silence the errors, only to get different errors. I’ll find a proper fix later.

1) Cookie processing

document.cookie

reflects back cookies set from the server while requesting the page:

TypeError: Cannot read property 'headers' of undefined

at /Users/hinloopen/Projects/Github/jsdom/lib/api.js:138:28

at processTicksAndRejections (internal/process/task_queues.js:93:5)

Let’s see what’s inside lib/api.js:

const req = resourceLoaderForInitialRequest.fetch(url, {

accept: "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",

cookieJar: options.cookieJar,

referrer: options.referrer

});

return req.then(body => {

const res = req.response;

options = Object.assign(options, {

url: req.href + originalHash,

contentType: res.headers["content-type"],

referrer: req.getHeader("referer")

});

return new JSDOM(body, options);

});

So that’s interesting. Apparently, the promise returned by request-promise not only has a .then method, it also has a .response property containing the response. I didn’t know that, and I don’t see it documented anywhere on the request-promise readme. I would just have used resolveWithFullResponse but whatever.

Let’s see if we can replicate this behavior.

We need to return a promise-like object that has a .then and a .catch (like a promise), but it also needs to have a .response getter, .href getter, and a .getHeader function.

Again, quick and dirty, let’s make it work the easiest way possible.

const cookieJar = options.cookieJar;

cookieJar.__setCookie = cookieJar.setCookie;

cookieJar.setCookie = (...args) => { /* ... */ }

const targetFetch = fetchCookie(fetch, cookieJar);

const fetchOptions = this._getFetchOptions(options);

const fetchResult = targetFetch(urlString, fetchOptions);

let result;

result = {

response: null,

href: urlString,

then: fetchResult.then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status=${response.status} for ${urlString}`);

}

result.response = response;

return response.arrayBuffer();

}).then.bind(fetchResult),

catch: fetchResult.catch.bind(fetchResult),

getHeader(name) {

return fetchOptions.headers[name];

}

};

return result;

The previously failing test now succeeds, but many others still fail. Let’s fix the next one:

1) Cookie processing

should share cookies when a cookie jar is shared:

TypeError: Cannot read property 'innerHTML' of null

at /Users/hinloopen/Projects/Github/jsdom/test/api/cookies.js:288:75

at processTicksAndRejections (internal/process/task_queues.js:93:5)

it("should share cookies when a cookie jar is shared", () => {

const cookieJar = new CookieJar();

return JSDOM.fromURL(testHost + "/TestPath/set-cookie-from-server", { cookieJar }).then(() => {

return JSDOM.fromURL(testHost + "/TestPath/html-get-cookie-header", { cookieJar });

}).then(({ window }) => {

const cookieHeader = window.document.querySelector(".cookie-header").innerHTML;

assertCookies(cookieHeader, [

"Test1=Basic",

"Test2=PathMatch",

"Test6=HttpOnly",

"Test9=Duplicate",

"Test10={\"prop1\":5,\"prop2\":\"value\"}",

"Malformed"

]);

assertCookies(window.document.cookie, [

"Test1=Basic",

"Test2=PathMatch",

"Test9=Duplicate",

"Test10={\"prop1\":5,\"prop2\":\"value\"}",

"Malformed"

]);

});

});

So the .cookie-header element couldn’t be found in the /html-get-cookie-header page. Maybe there is a hint somewhere in the document’s HTML. Let’s log window.document.body.innerHTML using console.log({ html: window.document.body.innerHTML });

{ html: '[object Response]' }

I strongly suspect somewhere inside my new fetch implementation, the HTML body’s toString returns "[object Response]". Let’s check our implementation again.

const fetchOptions = this._getFetchOptions(options);

const fetchPromise = targetFetch(urlString, fetchOptions);

let result;

const then = function(onfulfilled, onrejected) {

return fetchPromise.then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status=${response.status} for ${urlString}`);

}

result.response = response;

return response.arrayBuffer();

}).then(onfulfilled, onrejected);

};

result = {

response: null,

href: urlString,

then,

catch: function(onrejected) { return then(undefined, onrejected) },

getHeader(name) {

return fetchOptions.headers[name];

}

};

return result;

Now we get, yet again, different errors. One includes The "buf" argument must be one of type Buffer, TypedArray, or DataView. Received type object. I suspect this has to do with the ArrayBuffer returned by node-fetch: This is NOT the same as a NodeJS Buffer. Let’s make it a Buffer for NodeJS only:

const then = function(onfulfilled, onrejected) {

return fetchPromise.then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status=${response.status} for ${urlString}`);

}

result.response = response;

return response.arrayBuffer();

})

.then((arrayBuffer) => {

if (typeof Buffer === "undefined") {

return arrayBuffer;

} else {

return Buffer.from(arrayBuffer);

}

})

.then(onfulfilled, onrejected);

};

The next error I encounter is this one:

1) API: resource loading configuration

set to "usable"

canceling requests

should abort a script request (with no events) when stopping the window:

TypeError: openedRequest.abort is not a function

at RequestManager.close (lib/jsdom/browser/resources/request-manager.js:25:21)

at Window.stop (lib/jsdom/browser/Window.js:608:15)

at /Users/hinloopen/Projects/Github/jsdom/test/api/resources.js:559:20

at processTicksAndRejections (internal/process/task_queues.js:93:5)

.abort is not a function. Is openedRequest our fetch result?

/**

* Manage all the request and it is able to abort

* all pending request.

*/

module.exports = class RequestManager {

// ...

close() {

for (const openedRequest of this.openedRequests) {

openedRequest.abort();

}

this.openedRequests = [];

}

// ...

};

Let’s implement .abort, make it do nothing, and see if the error changes.

result = {

response: null,

abort: () => { console.log("TODO ABORT"); },

href: urlString,

then,

catch: function(onrejected) { return then(undefined, onrejected) },

getHeader(name) {

return fetchOptions.headers[name];

}

};

TODO ABORT

Error: Could not load script: "http://127.0.0.1:58978/"

1) API: resource loading configuration

set to "usable"

canceling requests

should abort a script request (with no events) when stopping the window:

The error event must not fire

+ expected - actual

-true

+false

at /Users/hinloopen/Projects/Github/jsdom/test/api/resources.js:920:12

at async Promise.all (index 0)

at async /Users/hinloopen/Projects/Github/jsdom/test/api/resources.js:561:9

Right, time to properly implement .abort. Can we even implement .abort using the browser’s Fetch API? According to MDN, it is experimental technology. Browser support might be incomplete, but I suspect it’s only used in NodeJS anyway.

node-fetch also supports aborting requests, and it is implemented in the same way! It requires an AbortController implementation - abort-controller is suggested.

yarn add abort-controller

const AbortController = require("abort-controller");

// ...

const targetFetch = fetchCookie(fetch, cookieJar);

const fetchOptions = this._getFetchOptions(options);

const abortController = new AbortController();

fetchOptions.signal = abortController.signal;

const fetchPromise = targetFetch(urlString, fetchOptions);

let result;

const then = function(onfulfilled, onrejected) {

return fetchPromise.then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status=${response.status} for ${urlString}`);

}

result.response = response;

return response.arrayBuffer();

})

.then((arrayBuffer) => typeof Buffer === "undefined" ? arrayBuffer : Buffer.from(arrayBuffer))

.then(onfulfilled, onrejected);

};

result = {

response: null,

abort: () => { abortController.abort(); },

href: urlString,

then,

catch: function(onrejected) { return then(undefined, onrejected) },

getHeader(name) {

return fetchOptions.headers[name];

}

};

Using abort still throws an error, causing the test to fail:

Error: Could not load script: "http://127.0.0.1:61567/"

# ...

type: 'aborted',

message: 'The user aborted a request.'

# ...

1) API: resource loading configuration

set to "usable"

canceling requests

should abort a script request (with no events) when stopping the window:

The error event must not fire

+ expected - actual

-true

+false

I’m not sure how request would have handled the abort, but based on this failure, it wasn’t by throwing an error. I can’t find any documentation about it. The source seems to just cancel the request and destroy the response without throwing an error. Maybe the promise just never resolves?

Let’s implement it that way, see if it works.

let aborted = false;

let result;

const then = function(onfulfilled, onrejected) {

return fetchPromise.then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status=${response.status} for ${urlString}`);

}

result.response = response;

return response.arrayBuffer();

})

.then((arrayBuffer) => typeof Buffer === "undefined" ? arrayBuffer : Buffer.from(arrayBuffer))

.then((result) => { if (!aborted) return onfulfilled(result); })

.catch((error) => { if (!aborted) return onrejected(error); });

};

result = {

response: null,

abort: function() {

aborted = true;

abortController.abort();

},

href: urlString,

then,

catch: function(onrejected) {

return then(undefined, onrejected)

},

getHeader(name) {

return fetchOptions.headers[name];

}

};

A lot of green tests this round! Looking good. Still, there are tens of failing tests, some mentioning the proxy. Others mentioning the Referer header.

It looks like I assigned the referrer to a header named Referrer instead of Referer. Let’s fix that and look at the next error.

// inside _getFetchOptions

if (!IS_BROWSER) {

const httpAgent = new http.Agent({ keepAlive: true });

const httpsAgent = new https.Agent({ keepAlive: true, rejectUnauthorized: this._strictSSL });

if (referrer) {

fetchOptions.headers.referer = referrer;

}

fetchOptions.agent = (url) => url.protocol == 'http:' ? httpAgent : httpsAgent;

}

The other two errors are going to be a problem, and are related to redirects:

1) Cookie processing

sent with requests

should gather cookies from redirects (GH-1089):

AssertionError: expected [ 'Test3=Redirect3' ] to deeply equal [ Array(3) ]

+ expected - actual

[

+ "Test1=Redirect1"

+ "Test2=Redirect2"

"Test3=Redirect3"

]

at assertCookies (test/api/cookies.js:383:10)

at /Users/hinloopen/Projects/Github/jsdom/test/api/cookies.js:247:9

at processTicksAndRejections (internal/process/task_queues.js:93:5)

2) API: JSDOM.fromURL()

referrer

should use the redirect source URL as the referrer, overriding a provided one:

AssertionError: expected 'http://example.com/' to equal 'http://127.0.0.1:55863/1'

+ expected - actual

-http://example.com/

+http://127.0.0.1:55863/1

at /Users/hinloopen/Projects/Github/jsdom/test/api/from-url.js:135:14

at processTicksAndRejections (internal/process/task_queues.js:93:5)

fetch uses transparent redirects, and it appears that fetch-cookie doesn’t store cookies around redirects. Reading the documentation, there is actually a fix for that. Let’s apply that fix.

It looks like it is as easy as changing the require to const fetchCookie = require('fetch-cookie/node-fetch');. Let’s do that, and re-run the tests.

1) API: JSDOM.fromURL()

referrer

should use the redirect source URL as the referrer, overriding a provided one:

AssertionError: expected 'http://example.com/' to equal 'http://127.0.0.1:56188/1'

+ expected - actual

-http://example.com/

+http://127.0.0.1:56188/1

The other error is gone. Now let’s see how we fix this one. I can make an educated guess what’s being tested here, but let’s look at the source.

it("should use the redirect source URL as the referrer, overriding a provided one", async () => {

const [requestURL] = await redirectServer("<p>Hello</p>", { "Content-Type": "text/html" });

const dom = await JSDOM.fromURL(requestURL, { referrer: "http://example.com/" });

assert.strictEqual(dom.window.document.referrer, requestURL);

});

So… it’s checking document.referrer. I’ve no idea where this is assigned and I don’t want to find out. Instead, since this test is testing JSDOM.fromURL specifically, let’s see if JSDOM.fromURL assigns the referrer somewhere.

static fromURL(url, options = {}) {

return Promise.resolve().then(() => {

// Remove the hash while sending this through the research loader fetch().

// It gets added back a few lines down when constructing the JSDOM object.

const parsedURL = new URL(url);

const originalHash = parsedURL.hash;

parsedURL.hash = "";

url = parsedURL.href;

options = normalizeFromURLOptions(options);

const resourceLoader = resourcesToResourceLoader(options.resources);

const resourceLoaderForInitialRequest = resourceLoader.constructor === NoOpResourceLoader ?

new ResourceLoader() :

resourceLoader;

const req = resourceLoaderForInitialRequest.fetch(url, {

accept: "text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8",

cookieJar: options.cookieJar,

referrer: options.referrer

});

return req.then(body => {

const res = req.response;

options = Object.assign(options, {

url: req.href + originalHash,

contentType: res.headers["content-type"],

referrer: req.getHeader("referer")

});

return new JSDOM(body, options);

});

});

}

Interesting - it uses this req.getHeader("referer"). req is the object I’m returning, so it actually calls my getHeader function. This function returns the header of the first request.

This is a problem: Because the request was redirected, a new request was started. However, my getHeader fetches the header of the first request, not the last request in the redirect chain.

This is also an issue for req.href, which returns the first request URL, not the last, but I haven’t confirmed a failing test for this problem.

Let’s see if we can peek into the redirect requests. Since fetch-cookie also fixed this problem for assigning cookies, I bet their fix shows how you can peek into redirect requests. Let’s take a look at fetch-cookie/node-fetch

module.exports = function nodeFetchCookieDecorator (nodeFetch, jar) {

const fetchCookie = require('./')(nodeFetch, jar)

return function nodeFetchCookie (url, userOptions = {}) {

const opts = Object.assign({}, userOptions, { redirect: 'manual' })

// Forward identical options to wrapped node-fetch but tell to not handle redirection.

return fetchCookie(url, opts)

.then(res => {

const isRedirect = (res.status === 303 || res.status === 301 || res.status === 302 || res.status === 307)

// Interpret the proprietary "redirect" option in the same way that node-fetch does.

if (isRedirect && userOptions.redirect !== 'manual' && userOptions.follow !== 0) {

const statusOpts = {

// Since the "follow" flag is not relevant for node-fetch in this case,

// we'll hijack it for our internal bookkeeping.

follow: userOptions.follow !== undefined ? userOptions.follow - 1 : undefined

}

if (res.status !== 307) {

statusOpts.method = 'GET'

statusOpts.body = null

}

const redirectOpts = Object.assign({}, userOptions, statusOpts)

return nodeFetchCookie(res.headers.get('location'), redirectOpts)

} else {

return res

}

})

}

}

So basically, their fix is to set the redirect-mode to manual and just call fetch again for every redirect. Because it calls fetch for every redirect, the cookies can be assigned and extracted every request by fetch-cookie.

The easiest way to keep track of all the redirect requests without also interfering with fetch-cookie’s fix is by wrapping the node-fetch instance, keeping track of the last request.

Let’s try that.

_getFetchOptions({ accept = "*/*" }) {

/** @type RequestInit */

const fetchOptions = {};

fetchOptions.headers = {

"User-Agent": this._userAgent,

"Accept-Language": "en",

"Accept-Encoding": "gzip",

Accept: accept,

};

if (!IS_BROWSER) {

const httpAgent = new http.Agent({ keepAlive: true });

const httpsAgent = new https.Agent({ keepAlive: true, rejectUnauthorized: this._strictSSL });

fetchOptions.agent = (url) => url.protocol == 'http:' ? httpAgent : httpsAgent;

}

return fetchOptions;

}

// inside fetch(urlString, options = {})

let lastUrl = options.referrer;

let lastOpts = null;

const myFetch = (url, opts) => {

if (lastUrl && !IS_BROWSER) {

opts.headers.referer = lastUrl;

}

lastUrl = url;

lastOpts = opts;

return fetch(url, opts);

};

const targetFetch = fetchCookie(myFetch, cookieJar);

const fetchOptions = this._getFetchOptions(options);

const abortController = new AbortController();

fetchOptions.signal = abortController.signal;

const fetchPromise = targetFetch(urlString, fetchOptions);

let aborted = false;

let result;

const then = function(onfulfilled, onrejected) {

return fetchPromise.then((response) => {

if (!response.ok) {

throw new Error(`Unexpected status=${response.status} for ${urlString}`);

}

result.response = response;

result.href = lastUrl;

return response.arrayBuffer();

})

.then((arrayBuffer) => typeof Buffer === "undefined" ? arrayBuffer : Buffer.from(arrayBuffer))

.then((result) => { if (!aborted) return onfulfilled(result); })

.catch((error) => {

if (!aborted) {

if (onrejected) {

return onrejected(error);

} else {

throw error;

}

}

});

};

result = {

response: null,

abort: function() {

aborted = true;

abortController.abort();

},

href: null,

then,

catch: function(onrejected) {

return then(undefined, onrejected)

},

getHeader(name) {

return lastOpts.headers[name];

}

};

return result;

So we now have fetch, myFetch and targetFetch. Bad variable names aside, the redirect-related failures seem solved. Let’s run the tests and look at the next errors.

# ...

with a Content-Type header specifying csiso88598e

1) should sniff no-bom-charset-http-equiv-no-quotes.html as ISO-8859-8

2) should sniff no-bom-charset-http-equiv-tis-620.html as ISO-8859-8

3) should sniff no-bom-charset-koi8.html as ISO-8859-8

4) should sniff no-bom-charset-utf-16.html as ISO-8859-8

5) should sniff no-bom-charset-utf-16be.html as ISO-8859-8

6) should sniff no-bom-charset-utf-16le.html as ISO-8859-8

7) should sniff no-bom-no-charset.html as ISO-8859-8

# ...

2) API: encoding detection

fromURL

with a Content-Type header specifying csiso88598e

should sniff no-bom-charset-http-equiv-tis-620.html as ISO-8859-8:

AssertionError: expected 'windows-874' to equal 'ISO-8859-8'

+ expected - actual

-windows-874

+ISO-8859-8

# ...

I have questions. Maybe the test provides some details.

describe("fromURL", { skipIfBrowser: true }, () => {

let server;

let host;

before(() => {

return createServer((req, res) => {

const [, fixture, query] = /^\/([^?]+)(\?.*)?$/.exec(req.url);

const headers = { "Content-Type": "text/html" };

if (query === "?charset=csiso88598e") {

headers["Content-Type"] = "text/html;charset=csiso88598e";

}

res.writeHead(200, headers);

fs.createReadStream(fixturePath(fixture)).pipe(res);

}).then(s => {

server = s;

host = `http://127.0.0.1:${s.address().port}`;

});

});

after(() => server.destroy());

describe("with no Content-Type header given", () => {

for (const encodingFixture of Object.keys(encodingFixtures)) {

const { name, body } = encodingFixtures[encodingFixture];

it(`should sniff ${encodingFixture} as ${name}`, () => {

return JSDOM.fromURL(`${host}/${encodingFixture}`).then(dom => {

assert.strictEqual(dom.window.document.characterSet, name);

assert.strictEqual(dom.window.document.body.textContent, body);

});

});

}

});

describe("with a Content-Type header specifying csiso88598e", () => {

for (const encodingFixture of Object.keys(encodingFixtures)) {

const { nameWhenOverridden, bodyWhenOverridden } = encodingFixtures[encodingFixture];

it(`should sniff ${encodingFixture} as ${nameWhenOverridden}`, () => {

return JSDOM.fromURL(`${host}/${encodingFixture}?charset=csiso88598e`).then(dom => {

assert.strictEqual(dom.window.document.characterSet, nameWhenOverridden);

assert.strictEqual(dom.window.document.contentType, "text/html"); // encoding should be stripped

if (bodyWhenOverridden) {

assert.strictEqual(dom.window.document.body.textContent, bodyWhenOverridden);

}

});

});

}

});

});

Looking at other tests, this csiso88598e content-type is also tested when invoking the constructir directly, and the expectations are similar, and these tests are not failing:

constructor, given binary data

with a contentType option specifying csiso88598e

Buffer

✓ should sniff no-bom-charset-http-equiv-no-quotes.html as ISO-8859-8

✓ should sniff no-bom-charset-http-equiv-tis-620.html as ISO-8859-8

✓ should sniff no-bom-charset-koi8.html as ISO-8859-8

✓ should sniff no-bom-charset-utf-16.html as ISO-8859-8

✓ should sniff no-bom-charset-utf-16be.html as ISO-8859-8

✓ should sniff no-bom-charset-utf-16le.html as ISO-8859-8

✓ should sniff no-bom-no-charset.html as ISO-8859-8

✓ should sniff utf-8-bom.html as UTF-8

✓ should sniff utf-16be-bom.html as UTF-16BE

✓ should sniff utf-16le-bom.html as UTF-16LE

fromURL

with no Content-Type header given

✓ should sniff no-bom-charset-http-equiv-no-quotes.html as ISO-8859-5 (48ms)

✓ should sniff no-bom-charset-http-equiv-tis-620.html as windows-874

✓ should sniff no-bom-charset-koi8.html as KOI8-R

✓ should sniff no-bom-charset-utf-16.html as UTF-8

✓ should sniff no-bom-charset-utf-16be.html as UTF-8

✓ should sniff no-bom-charset-utf-16le.html as UTF-8

✓ should sniff no-bom-no-charset.html as windows-1252

✓ should sniff utf-8-bom.html as UTF-8

✓ should sniff utf-16be-bom.html as UTF-16BE

✓ should sniff utf-16le-bom.html as UTF-16LE

with a Content-Type header specifying csiso88598e

1) should sniff no-bom-charset-http-equiv-no-quotes.html as ISO-8859-8

2) should sniff no-bom-charset-http-equiv-tis-620.html as ISO-8859-8

3) should sniff no-bom-charset-koi8.html as ISO-8859-8

4) should sniff no-bom-charset-utf-16.html as ISO-8859-8

5) should sniff no-bom-charset-utf-16be.html as ISO-8859-8

6) should sniff no-bom-charset-utf-16le.html as ISO-8859-8

7) should sniff no-bom-no-charset.html as ISO-8859-8

Correctly handling this csiso88598e content-type should be done by the constructor. Looking at the source and the tests, the constructor accepts a contentType option that, when equal to csiso88598e, parses the response as ISO-8859-8.

Additionally, the test-server returns a Content-Type: text/html;charset=csiso88598e header. This content-type should be passed to the JSDOM constructor from fromURL:

static fromURL(url, options = {}) {

return Promise.resolve().then(() => {

return req.then(body => {

const res = req.response;

options = Object.assign(options, {

url: req.href + originalHash,

contentType: res.headers["content-type"],

referrer: req.getHeader("referer")

});

return new JSDOM(body, options);

});

});

}

Let’s take a look at res.headers inside one of the failing tests using console.log(res.headers, res.headers["content-type"]);:

Headers {

[Symbol(map)]: [Object: null prototype] {

'content-type': [ 'text/html;charset=csiso88598e' ],

date: [ 'Mon, 29 Jun 2020 20:44:07 GMT' ],

connection: [ 'keep-alive' ],

'transfer-encoding': [ 'chunked' ]

}

} undefined

So the content-type is there, but res.headers["content-type"] is undefined. That’s because res.headers is not a regular object, but instead is a Headers object. Apperently, you cannot use the [] operator to access the Header’s properties. Instead, you should use .get.

For backwards compatibility, let’s change response to have a headers property that’s just a plain JS object.

// inside `then`

const { ok, status } = response;

if (!ok) {

throw new Error(`Unexpected status=${status} for ${urlString}`);

}

const headers = {};

for (const [ key, value ] of response.headers) {

headers[key] = value;

}

result.response = {

status,

headers,

};

result.href = lastUrl;

return response.arrayBuffer();

All encoding-related tests are now green. Let’s see what’s next. There a lot less failures now, so waiting for a failing test now takes minutes.

There are some interesting failures. A common one is a maximum call stack size exceeded error in setCookie:

RangeError: Maximum call stack size exceeded

at Array.values (<anonymous>)

at CookieJar.cookieJar.setCookie [as __setCookie] (/Users/hinloopen/Projects/Github/jsdom/lib/jsdom/browser/resources/resource-loader.js:148:28)

at CookieJar.cookieJar.setCookie [as __setCookie] (/Users/hinloopen/Projects/Github/jsdom/lib/jsdom/browser/resources/resource-loader.js:148:28)

at CookieJar.cookieJar.setCookie [as __setCookie] (/Users/hinloopen/Projects/Github/jsdom/lib/jsdom/browser/resources/resource-loader.js:148:28)

at CookieJar.cookieJar.setCookie [as __setCookie] (/Users/hinloopen/Projects/Github/jsdom/lib/jsdom/browser/resources/resource-loader.js:148:28)

at CookieJar.cookieJar.setCookie [as __setCookie] (/Users/hinloopen/Projects/Github/jsdom/lib/jsdom/browser/resources/resource-loader.js:148:28)

at CookieJar.cookieJar.setCookie [as __setCookie] (/Users/hinloopen/Projects/Github/jsdom/lib/jsdom/browser/resources/resource-loader.js:148:28)

at CookieJar.cookieJar.setCookie [as __setCookie] (/Users/hinloopen/Projects/Github/jsdom/lib/jsdom/browser/resources/resource-loader.js:148:28)

at CookieJar.cookieJar.setCookie [as __setCookie] (/Users/hinloopen/Projects/Github/jsdom/lib/jsdom/browser/resources/resource-loader.js:148:28)

at CookieJar.cookieJar.setCookie [as __setCookie] (/Users/hinloopen/Projects/Github/jsdom/lib/jsdom/browser/resources/resou

Another one is mentioning the proxy, which I have not yet implemented:

1) API: resource loading configuration

With a custom resource loader

should be able to customize the proxy option:

AssertionError: expected 1 to equal 3

+ expected - actual

-1

+3

A timeout:

2) web-platform-tests

cors

credentials-flag.htm:

Error: Error: test harness should not timeout: cors/credentials-flag.htm

And cookies being sent for preflight requests:

31) web-platform-tests

xhr

access-control-preflight-request-must-not-contain-cookie.htm:

Failed in "Preflight request must not contain any cookie header":

assert_unreached: Unexpected error. Reached unreachable code

There might also be some other errors in between, but the logs are full with the setCookie stacktraces, so let’s first fix that one.

It seems that the cookieJar keeps being patched over and over again, which was not my intention. Fixing this should fix the stack-level-too-deep error, and it might also fix the timeout error.

Let’s add a check to ensure the cookieJar is only patched once:

// inside `fetch(urlString, options = {})`

const cookieJar = options.cookieJar;

if (!cookieJar.__setCookie) {

cookieJar.__setCookie = cookieJar.setCookie;

cookieJar.setCookie = (...args) => {

if (args.length === 3) {

args.splice(2, 0, {});

}

if (args.length === 4) {

args[2].ignoreError = true;

}

return cookieJar.__setCookie(...args);

}

}

4917 passing (11m)

563 pending

1 failing

1) API: resource loading configuration

With a custom resource loader

should be able to customize the proxy option:

AssertionError: expected 1 to equal 3

+ expected - actual

-1

+3

at /Users/hinloopen/Projects/Github/jsdom/test/api/resources.js:666:16

at runMicrotasks (<anonymous>)

at processTicksAndRejections (internal/process/task_queues.js:93:5)

4917 passing tests, 1 failing. Only the proxy implementation remains.

It seems that one can replace the node-fetch HTTP(s) agents with a proxy agent using https-proxy-agent as mentioned by jimliang.

Looking at the dependencies of https-proxy-agent, it seems there are two: agent-base and debug.

I feel like this debug dependency should have been optional, but who am I to judge. The agent-base dependency seems sensible.

I also noticed there is a http-proxy-agent variant, without the https. I’m not sure if we need both. I’m hoping the https one just supports both HTTP and HTTPS so I don’t have to install both.

Let’s try https-proxy-agent.

yarn add https-proxy-agent

const HttpsProxyAgent = require("https-proxy-agent");

// _getFetchOptions({ accept = "*/*" }) {

if (!IS_BROWSER) {

const proxyAgent = this._proxy ? new HttpsProxyAgent(this._proxy) : null;

const httpAgent = new http.Agent({ keepAlive: true });

const httpsAgent = new https.Agent({ keepAlive: true, rejectUnauthorized: this._strictSSL });

fetchOptions.agent = (url) => proxyAgent ? proxyAgent : (url.protocol == 'http:' ? httpAgent : httpsAgent);

}

Let’s run the tests, see if this works.

# (with .only on "should be able to customize the proxy option")

0 passing (6s)

1 failing

1) API: resource loading configuration

With a custom resource loader

should be able to customize the proxy option:

Error: Timeout of 2000ms exceeded. For async tests and hooks, ensure "done()" is called; if returning a Promise, ensure it resolves. (/Users/hinloopen/Projects/Github/jsdom/test/index.js)

at listOnTimeout (internal/timers.js:531:17)

at processTimers (internal/timers.js:475:7)

Timeout? That’s not helpful at all. Since the proxy is HTTP, let’s blindly try http-proxy-agent. Additionally, the keepAlive and rejectUnauthorized options are not passed to the proxy agent. Let’s add them. Both proxy agents accept either a URL or an object post, hostname, protocol: The output of url.parse. I’m assuming the remaining options are passed to http(s).Agent.

Let’s combine all my assumptions and see if we get anything other than a timeout. Let’s also increase the timeout, just in case something is just being slow.

yarn add http-proxy-agent

const url = require("url");

const HttpProxyAgent = require("http-proxy-agent");

const HttpsProxyAgent = require("https-proxy-agent");

// _getFetchOptions({ accept = "*/*" }) {

if (!IS_BROWSER) {

const agentOpts = { keepAlive: true, rejectUnauthorized: this._strictSSL };

const proxyOpts = { ...agentOpts, ...(this._proxy ? url.parse(this._proxy) : {}) };

const httpAgent = this._proxy ? new HttpProxyAgent(proxyOpts) : new http.Agent(agentOpts);

const httpsAgent = this._proxy ? new HttpsProxyAgent(proxyOpts) : new https.Agent(agentOpts);

fetchOptions.agent = (url) => url.protocol == 'http:' ? httpAgent : httpsAgent;

}

# npm t -- --timeout 9999

# (with .only on "should be able to customize the proxy option")

this._proxy http://127.0.0.1:63767

this._proxy http://127.0.0.1:63767

✓ should be able to customize the proxy option (80ms)

1 passing (4s)

Success!

Let’s do a minor cleanup to create agents on-demand, and re-run all tests to make sure everything still works.

/**

*

* @param {string} protocol "http:" or "https:"

*/

_getAgent(protocol) {

const isHttps = protocol === "https:";

const agentOpts = { keepAlive: true, rejectUnauthorized: this._strictSSL };

if (this._proxy) {

agentOpts.rejectUnauthorized = this._strictSSL;

const proxyOpts = { ...url.parse(this._proxy), ...agentOpts };

return isHttps ? new HttpsProxyAgent(proxyOpts) : new HttpProxyAgent(proxyOpts);

} else {

return isHttps ? new https.Agent(agentOpts) : new http.Agent(agentOpts);

}

}

// inside _getFetchOptions({ accept = "*/*" }) {

if (!IS_BROWSER) {

fetchOptions.agent = (url) => this._getAgent(url.protocol);

}

All tests are gean. Great. This is the final result. I intent to clean it up after the remaining request dependencies are removed.

/**

*

* @param {string} protocol "http:" or "https:"

*/

_getAgent(protocol) {

const isHttps = protocol === "https:";

const agentOpts = { keepAlive: true, rejectUnauthorized: this._strictSSL };

if (this._proxy) {

agentOpts.rejectUnauthorized = this._strictSSL;

const proxyOpts = { ...url.parse(this._proxy), ...agentOpts };

return isHttps ? new HttpsProxyAgent(proxyOpts) : new HttpProxyAgent(proxyOpts);

} else {

return isHttps ? new https.Agent(agentOpts) : new http.Agent(agentOpts);

}

}

// inside _getFetchOptions({ accept = "*/*" }) {

case "http":

case "https": {

const cookieJar = options.cookieJar;

if (!cookieJar.__setCookie) {

cookieJar.__setCookie = cookieJar.setCookie;

cookieJar.setCookie = (...args) => {

if (args.length === 3) {

args.splice(2, 0, {});

}

if (args.length === 4) {

args[2].ignoreError = true;

}

return cookieJar.__setCookie(...args);

}

}

let lastUrl = options.referrer;

let lastOpts = null;

const myFetch = (url, opts) => {

if (lastUrl && !IS_BROWSER) {

opts.headers.referer = lastUrl;

}

lastUrl = url;

lastOpts = opts;

return fetch(url, opts);

};

const targetFetch = fetchCookie(myFetch, cookieJar);

const fetchOptions = this._getFetchOptions(options);

const abortController = new AbortController();

fetchOptions.signal = abortController.signal;

const fetchPromise = targetFetch(urlString, fetchOptions);

let aborted = false;

let result;

const then = function(onfulfilled, onrejected) {

return fetchPromise.then((response) => {

const { ok, status } = response;

if (!ok) {

throw new Error(`Unexpected status=${status} for ${urlString}`);

}

const headers = {};

for (const [ key, value ] of response.headers) {

headers[key] = value;

}

result.response = {

status,

headers,

};

result.href = lastUrl;

return response.arrayBuffer();

})

.then((arrayBuffer) => typeof Buffer === "undefined" ? arrayBuffer : Buffer.from(arrayBuffer))

.then((result) => { if (!aborted) return onfulfilled(result); })

.catch((error) => {

if (!aborted) {

if (onrejected) {

return onrejected(error);

} else {

throw error;

}

}

});

};

result = {

response: null,

abort: function() {

aborted = true;

abortController.abort();

},

href: null,

then,

catch: function(onrejected) {

return then(undefined, onrejected)

},

getHeader(name) {

return lastOpts.headers[name];

}

};

return result;

}

Because this post has become fairly large, I’ll continue this post in a part 2. To be continued…