I’m working on a small prototype game where you build a Spa Resort. In this game, you’re building saunas and baths for peeps (virtual people) to visit. The idea is to increase their happiness by introducing them to temperature differences.

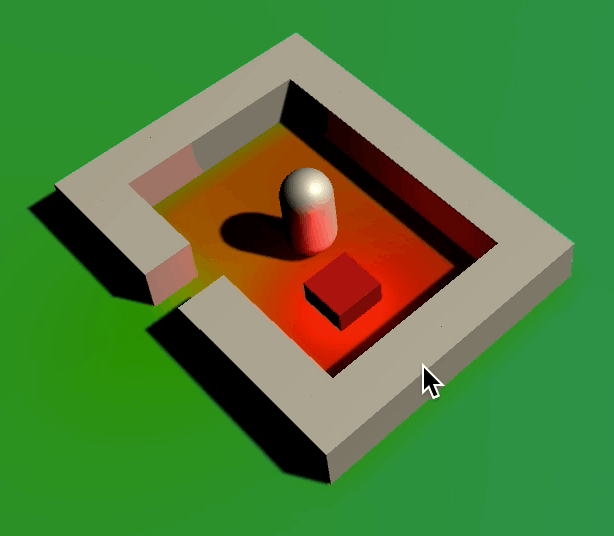

Peep enjoying a sauna

In the current prototype, you can put down walls, heaters and chillers. The walls isolate and prevent peeps from moving through them. The heaters and chillers control temperature.

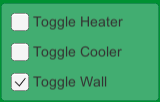

I use WASD to control the camera, and I use the mouse to put down walls, heaters and chillers. The game even runs on my iPad, but this is just silly.

I'm a computer now

Mouse & Keyboard support

My game uses Input.GetMouseButtonDown(0) combined with Input.mousePosition to listen for mouse clicks. When the player clicks somewhere, some action is performed. Using a Unity Toggle Group, the player can choose what action is performed on clicking the mouse.

Since I can place down walls, chillers and heaters using the trackpad or by tapping the screen, it seems that Unity fakes a mouse click when the player taps the screen.

Using the trackpad to move the iPad cursor does not notify my game about mouse movement: Input.mousePosition is only updated when I tap the screen.

I also notice that tapping on my UI causes walls to be built below it. I use EventSystem.current.IsPointerOverGameObject() to ignore clicks on the UI, but this doesn’t seem to work for touch events.

Adding the following MonoBehaviour to my game confirms my suspicions.

InputLoggingBehaviour.cs

    using UnityEngine;
    using UnityEngine.EventSystems;

    public class InputLoggingBehaviour : MonoBehaviour
    {
        void Update()
        {
            if (Input.GetMouseButtonDown(0))
            {
                Debug.unityLogger.Log("Input", Input.mousePosition);
                Debug.unityLogger.Log("Input", Input.GetMouseButtonDown(0));
                Debug.unityLogger.Log("Input", EventSystem.current.IsPointerOverGameObject());
            }
        }
    }

iPad log after tapping screen (only lines matching Input)

    Input: (81.0, 63.0, 0.0)
    Input: True
    Input: False

IsPointerOverGameObject does return True on my MacBook, so it seems the parameterless call only looks at the mouse pointer and ignores touches.

For camera movement, I’m using Unity’s Input.GetAxis("Horizontal") and Input.GetAxis("Vertical"). This way I can move the camera using WASD. This is obviously useless on an iPad, so I have to add some way to move the camera using touch controls.

Touch controls

Before I get started, this is the code I’m starting with.

SurfacePointerBehaviour.cs

    using UnityEngine;
    using UnityEngine.EventSystems;

    public class SurfacePointerBehaviour : MonoBehaviour
    {
        public Vector2Int? GetPointClicked()
        {
            if (Input.GetMouseButtonDown(0)
                && !EventSystem.current.IsPointerOverGameObject())
            {
                return GetSurfacePositionFromScreenPosition(Input.mousePosition);
            }
            return null;
        }

        private Ray ray;
        private Plane plane = new Plane(Vector3.up, Vector3.zero);
        private float distance = 0;

        private Vector2Int? GetSurfacePositionFromScreenPosition(Vector3 screenPosition)
        {
            ray = Camera.main.ScreenPointToRay(screenPosition);
            if (plane.Raycast(ray, out distance))
            {
                var point = ray.GetPoint(distance);
                int x = Mathf.FloorToInt(point.x);
                int y = Mathf.FloorToInt(point.z);
                return new Vector2Int(x, y);
            }
            return null;
        }
    }

Basically, if any script is interested in knowing whether the player clicked some point on the surface (aka terrain, ground, floor), it uses GetPointClicked() to get the point where the player clicked on the surface.

If the player clicked the GUI or didn’t click anything last frame, GetPointClicked should return null. I need to patch GetPointClicked to listen for touches as well.
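To make the intended usage concrete, here is a minimal sketch of a script that polls GetPointClicked() every frame. The class name WallPlacerBehaviour and the PlaceWallAt method are hypothetical and only stand in for whatever the real game does with the clicked cell.

```csharp
using UnityEngine;

// Hypothetical consumer: the class name and PlaceWallAt are made up for illustration.
public class WallPlacerBehaviour : MonoBehaviour
{
    // Assumed to be assigned in the Unity editor.
    public SurfacePointerBehaviour surfacePointer;

    void Update()
    {
        // null means: nothing was clicked this frame, or the click hit the UI.
        Vector2Int? point = surfacePointer.GetPointClicked();
        if (point.HasValue)
        {
            PlaceWallAt(point.Value);
        }
    }

    private void PlaceWallAt(Vector2Int cell)
    {
        // Placeholder: the real game would put down a wall here.
        Debug.Log($"Place wall at {cell}");
    }
}
```

Because the consumer only sees a nullable grid cell, it does not need to know whether the point came from a mouse click or a finger tap.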

To get started, I first disable Input.simulateMouseWithTouches. This way I can leave all my mouse-related event handlers the way they are and write alternative handlers for touch events.

For now, GetPointClicked will return the point under the first finger touching the screen, and only in the frame where that finger first makes contact. This mirrors the mouse behaviour, where GetPointClicked only returns a point in the frame the mouse button is pressed down.

According to the TouchPhase documentation, the initial point of contact has phase = Began, and a finger being lifted from screen has phase = Ended.

SurfacePointerBehaviour.cs

    void Start()
    {
        Input.simulateMouseWithTouches = false;
    }

    public Vector2Int? GetPointClicked()
    {
        if (Input.GetMouseButtonDown(0)
            && !EventSystem.current.IsPointerOverGameObject())
        {
            return GetSurfacePositionFromScreenPosition(Input.mousePosition);
        }
        else if (Input.touchCount > 0)
        {
            for (int i = 0; i < Input.touchCount; i++)
            {
                var touch = Input.GetTouch(i);
                if (touch.phase == TouchPhase.Began
                    && !EventSystem.current.IsPointerOverGameObject(touch.fingerId))
                {
                    return GetSurfacePositionFromScreenPosition(touch.position);
                }
            }
        }
        return null;
    }

By passing touch.fingerId to IsPointerOverGameObject, it correctly reports whether this pointer (the finger) is touching the GUI or not.

Now, the first touch not touching the GUI is returned by GetPointClicked. This already fixes being able to put down walls, heaters and chillers. Panning the camera remains.

Drag to move camera

To pan (move) the camera, I’ll allow the player to drag one finger on screen. This already poses a problem: currently, touching the screen instantly triggers a “click”, while we don’t yet know whether the player intended to tap to interact or drag to move the camera.

For this reason, we need to wait until the player either releases or moves their finger. This is easily changed by using TouchPhase.Ended instead of TouchPhase.Began.

    var touch = Input.GetTouch(i);
    if (touch.phase == TouchPhase.Ended
        && !EventSystem.current.IsPointerOverGameObject(touch.fingerId))
    {
        return GetSurfacePositionFromScreenPosition(touch.position);
    }

Next, I want to move the camera based on the finger’s movement. For each touch, the movement since the last frame is accessible through touch.deltaPosition. Let’s first record the start position.

I want to ignore all touches that began on the UI, so I use IsPointerOverGameObject to ignore these. Because I won’t know what finger will be moving or tapping, I’m recording all fingers in a Dictionary, using the fingerId as a key.

Because I suspect I’ll be writing quite a few lines of code handling touch events, I’ll be moving these to a separate method called HandleTouch. This HandleTouch method will be invoked for every touch.

Because I don’t want the logic to be invoked again for every script using GetPointClicked, I’m moving the logic to the Update call and storing the results in private variables, one of which is returned by GetPointClicked.

I also noticed Input.mousePosition returns a Vector3 while touch.position returns a Vector2. Cool, I suppose. I changed GetSurfacePositionFromScreenPosition to accept a Vector2 and shortened its name to GetSurfacePosition because I think the old name was too long.

The intermediate result is as follows:

SurfacePointerBehaviour.cs (partial)

    using UnityEngine;
    using UnityEngine.EventSystems;
    using System.Collections.Generic;

    public class SurfacePointerBehaviour : MonoBehaviour
    {
        private readonly Dictionary<int, Vector2> fingerStartPositions = new Dictionary<int, Vector2>();
        private readonly Dictionary<int, Vector2> fingerCurrentPositions = new Dictionary<int, Vector2>();
        private Vector2Int? mouseClickPosition = null;
        private Vector2Int? fingerTapPosition = null;

        void Start()
        { /* ... */ }

        public Vector2Int? GetPointClicked()
        {
            if (mouseClickPosition != null)
            {
                return mouseClickPosition;
            }
            else if (fingerTapPosition != null)
            {
                return fingerTapPosition;
            }
            return null;
        }

        void Update()
        {
            UpdateMouseClickPosition();
            UpdateFingerTapPosition();
        }

        private void UpdateMouseClickPosition()
        {
            if (Input.GetMouseButtonDown(0)
                && !EventSystem.current.IsPointerOverGameObject())
            {
                mouseClickPosition = GetSurfacePosition(Input.mousePosition);
            }
            else
            {
                mouseClickPosition = null;
            }
        }

        private void UpdateFingerTapPosition()
        {
            fingerTapPosition = null;
            if (Input.touchCount > 0)
            {
                for (int i = 0; i < Input.touchCount; i++)
                {
                    HandleTouch(Input.GetTouch(i));
                }
            }
        }

        private void HandleTouch(Touch touch)
        {
            if (touch.phase == TouchPhase.Began
                && !EventSystem.current.IsPointerOverGameObject(touch.fingerId))
            {
                fingerStartPositions[touch.fingerId] = touch.position;
                fingerCurrentPositions[touch.fingerId] = touch.position;
            }
            else if (fingerStartPositions.ContainsKey(touch.fingerId))
            {
                fingerCurrentPositions[touch.fingerId] = touch.position;
                if (touch.phase == TouchPhase.Moved)
                {
                    // ???
                }
                else if (touch.phase == TouchPhase.Ended)
                {
                    fingerStartPositions.Remove(touch.fingerId);
                    fingerCurrentPositions.Remove(touch.fingerId);
                    fingerTapPosition = GetSurfacePosition(touch.position);
                }
            }
        }

        private Ray ray;
        private readonly Plane plane = new Plane(Vector3.up, Vector3.zero);
        private float distance = 0;

        private Vector2Int? GetSurfacePosition(Vector2 screenPosition)
        { /* */ }
    }

So basically I’ve refactored the class so it’s easier to modify. So far, I should have changed nothing functionally. Now let’s distinguish dragging from tapping.

The distinction between a tap and a drag lies in movement and duration. If a player moves their finger for more than, let’s say, half a second and (or?) more than a finger’s width of pixels, I’m pretty sure the player meant to drag something instead of tapping something.

Without thinking about the actual duration or distance (or both), let’s make it configurable and track both. When we decide a player’s finger is dragging and not tapping, I switch some boolean for that finger.
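One thing to keep in mind when making the distance configurable: “a finger’s width of pixels” depends on the pixel density of the screen. As a rough sketch, a physical distance could be converted to pixels with Screen.dpi. The DragThreshold class and both default values below are assumptions for illustration, not tuned numbers from the game.

```csharp
using UnityEngine;

// Hypothetical helper; the class name and both defaults are guesses for illustration.
public static class DragThreshold
{
    public const float DefaultInches = 0.15f;
    public const float FallbackDpi = 160f;

    // Converts a physical distance in inches to pixels on the current screen.
    public static float InPixels(float inches = DefaultInches)
    {
        // Screen.dpi may report 0 when the platform cannot determine the density.
        float dpi = Screen.dpi > 0f ? Screen.dpi : FallbackDpi;
        return inches * dpi;
    }
}
```

With something like this, the drag distance threshold could be initialised from DragThreshold.InPixels() instead of a fixed pixel count, so the same gesture feels similar on screens with different densities.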

I can already determine the distance of a finger by calculating the distance between a finger’s fingerCurrentPositions and fingerStartPositions. Let’s similarly track time. I only need to track time from the start of the touch, since I can calculate the duration of a touch by comparing the start time with the current time.

I keep track of the start time in a dictionary called fingerStartTimes. Once I’ve determined a finger is dragging, I’ll add it to a set of ints called draggingFingers. I’ll keep the thresholds, called dragMinimumDuration and dragMinimumDistance, in public fields so they can be easily tweaked.

Basically, when a finger is moved for at least dragMinimumDistance and dragMinimumDuration, the finger is added to draggingFingers. From this point forward, the first dragging finger is being used to control the camera.

“The first” is also kept in a variable, called primaryDraggingFingerId, and can only be set when no other finger is dragging. This variable is cleared when that finger is released.

This way, any other dragging fingers are ignored until all dragging fingers are released. Taps can still occur.

SurfacePointerBehaviour.cs (partial)

    private void HandleTouch(Touch touch)
    {
        if (touch.phase == TouchPhase.Began
            && !EventSystem.current.IsPointerOverGameObject(touch.fingerId))
        {
            fingerStartPositions[touch.fingerId] = touch.position;
            fingerCurrentPositions[touch.fingerId] = touch.position;
            fingerStartTimes[touch.fingerId] = Time.time;
        }
        else if (fingerStartPositions.ContainsKey(touch.fingerId))
        {
            fingerCurrentPositions[touch.fingerId] = touch.position;
            if (touch.phase == TouchPhase.Moved || touch.phase == TouchPhase.Stationary)
            {
                if (GetTouchDuration(touch) >= dragMinimumDuration
                    && GetTouchDistance(touch) >= dragMinimumDistance)
                {
                    draggingFingers.Add(touch.fingerId);
                    if (primaryDraggingFingerId == null)
                    {
                        primaryDraggingFingerId = touch.fingerId;
                    }
                }
            }
            else if (touch.phase == TouchPhase.Ended)
            {
                fingerStartPositions.Remove(touch.fingerId);
                fingerCurrentPositions.Remove(touch.fingerId);
                fingerStartTimes.Remove(touch.fingerId);
                if (draggingFingers.Remove(touch.fingerId))
                {
                    if (primaryDraggingFingerId == touch.fingerId)
                    {
                        primaryDraggingFingerId = null;
                    }
                }
                else
                {
                    fingerTapPosition = GetSurfacePosition(touch.position);
                }
            }
        }
    }
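The partial listing above leaves out the new fields and the two helper methods. A plausible sketch of them, following the description in the text, could look like this. The exact declarations are an assumption: the 0.5 second and 20 pixel defaults are taken from the values mentioned elsewhere in this post, and the fragment is meant to live inside SurfacePointerBehaviour (which already has `using System.Collections.Generic;`).

```csharp
// Sketch of the members HandleTouch relies on; goes inside SurfacePointerBehaviour.
public float dragMinimumDuration = 0.5f; // seconds
public float dragMinimumDistance = 20f;  // pixels, in screenspace

private readonly Dictionary<int, float> fingerStartTimes = new Dictionary<int, float>();
private readonly HashSet<int> draggingFingers = new HashSet<int>();
private int? primaryDraggingFingerId = null;

// Seconds since this finger first touched the screen.
private float GetTouchDuration(Touch touch)
{
    return Time.time - fingerStartTimes[touch.fingerId];
}

// Straight-line screenspace distance between the finger's start and current position.
private float GetTouchDistance(Touch touch)
{
    return Vector2.Distance(
        fingerStartPositions[touch.fingerId],
        fingerCurrentPositions[touch.fingerId]);
}
```

Both helpers are only safe to call for fingers that passed the Began branch, which is what the ContainsKey check in HandleTouch guarantees.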

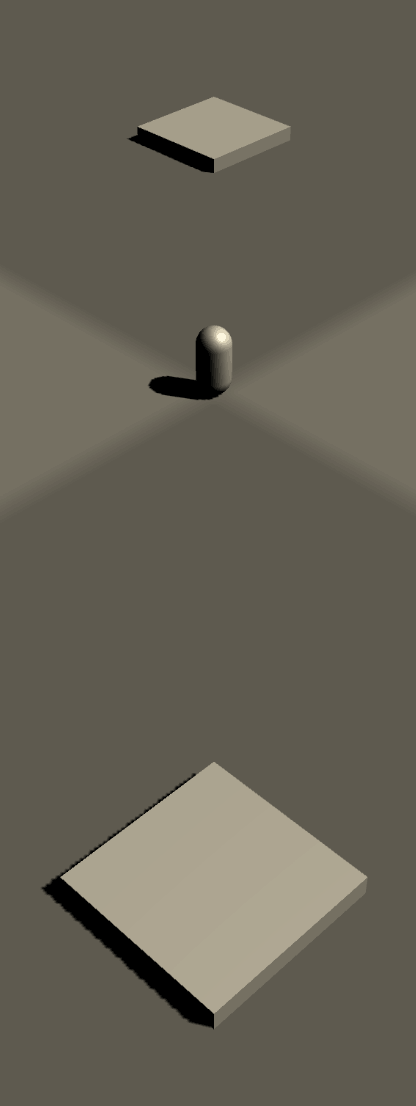

Equal sized objects as seen by a perspective camera.

I control my camera from a separate behaviour. I only want to pass minimum data between my SurfacePointerBehaviour and my camera behaviour, called TopDownCameraRigBehaviour.

I want to make the dragging feel like you’re dragging the surface. The surface position you started the drag at must remain under your finger. For 2D games, this isn’t hard, because a distance on the surface always maps linearly to a distance on screen. However, for a 3D perspective camera, this mapping changes with the distance between the camera and the surface.

The solution I’m thinking of is to expose the worldspace difference between the current finger position and the desired finger position.

The worldspace distance is the distance in-game. The desired finger position is the point on the in-game surface where the finger started dragging. The current finger position is the point on the surface the finger is currently touching.

The idea is that the exposed difference is always at least somewhat in the right direction and amplitude, until the camera is moved to the correct position where the exposed difference would be 0.
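To see why this should converge, here is a short sketch of the geometry. It assumes the camera only translates parallel to the surface and does not rotate or zoom during the drag. The symbols are my own notation: $s$ is the surface point where the drag started, $c$ is the surface point currently under the finger, and $\Delta$ is the exposed difference.

$$
\Delta = c - s
$$

If the camera is translated by $-\Delta$ along the surface, the ray through the finger’s screen position keeps its direction and its origin shifts by $-\Delta$, so its intersection with the surface shifts by the same amount:

$$
c' = c - \Delta = s
$$

Under these assumptions, a single correction puts the start point back under the finger, and the exposed difference becomes 0 until the finger moves again.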

I already have both the current and start position of a finger, but it’s the screenspace position. I first have to convert these positions to worldspace. I already have this GetSurfacePosition method, but it returns the worldspace coordinates floored to an int. For my camera dragging use-case, I don’t want floored coordinates.

To fix this, I change GetSurfacePosition to expose a non-floored Vector2, and introduce a GetFlooredSurfacePosition to get a floored Vector2Int.

SurfacePointerBehaviour.cs (partial)

    private Vector2Int? GetFlooredSurfacePosition(Vector2 screenPosition)
    {
        Vector2? surfacePosition = GetSurfacePosition(screenPosition);
        if (surfacePosition == null)
        {
            return null;
        }
        else
        {
            return Vector2Int.FloorToInt((Vector2)surfacePosition);
        }
    }

    private Vector2? GetSurfacePosition(Vector2 screenPosition)
    {
        ray = Camera.main.ScreenPointToRay(screenPosition);
        if (plane.Raycast(ray, out distance))
        {
            var point = ray.GetPoint(distance);
            return new Vector2(point.x, point.z);
        }
        return null;
    }

Because the camera might be moving at any point in time, I want to record the position of the finger on the surface in worldspace coordinates at the moment the finger began touching the screen. By the time we detect the finger is dragging, either the camera or the finger might already have moved. Therefore, we need to keep the start surface position in a separate variable, and we need to keep track of it for every finger, since we don’t know yet which finger will be dragging. We need another dictionary. I call this dictionary fingerStartSurfacePositions.

Like fingerStartPositions, it keeps the position of the finger per finger, but instead of keeping the position on screen in screenspace coordinates, I keep the position on surface in worldspace coordinates.

If the primary finger is dragging, I subtract the start surface position of that finger from the current surface position of that finger. The result is the target camera movement, stored in DragSurfaceDelta. I’ll expose a getter for that property.

SurfacePointerBehaviour.cs (partial)

    public Vector2? DragSurfaceDelta { get; private set; } = null;

    // inside UpdateFingerTapPosition
    DragSurfaceDelta = null;
    if (primaryDraggingFingerId != null)
    {
        Vector2? currentSurfacePosition = GetSurfacePosition(fingerCurrentPositions[(int)primaryDraggingFingerId]);
        if (currentSurfacePosition != null)
        {
            Vector2 startSurfacePosition = fingerStartSurfacePositions[(int)primaryDraggingFingerId];
            DragSurfaceDelta = (Vector2)currentSurfacePosition - startSurfacePosition;
        }
    }
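The partial listing does not show where fingerStartSurfacePositions gets filled. A sketch of how the Began branch of HandleTouch might record it is shown below. Skipping touches whose ray misses the surface is an assumption here, although the final version of the class further down makes the same choice.

```csharp
// Sketch: new dictionary, declared next to the other per-finger dictionaries.
private readonly Dictionary<int, Vector2> fingerStartSurfacePositions = new Dictionary<int, Vector2>();

// Sketch: the Began branch inside HandleTouch.
if (touch.phase == TouchPhase.Began
    && !EventSystem.current.IsPointerOverGameObject(touch.fingerId))
{
    Vector2? startSurfacePosition = GetSurfacePosition(touch.position);
    // Assumption: a touch that does not hit the surface plane is not tracked at all.
    if (startSurfacePosition != null)
    {
        fingerStartPositions[touch.fingerId] = touch.position;
        fingerCurrentPositions[touch.fingerId] = touch.position;
        fingerStartTimes[touch.fingerId] = Time.time;
        fingerStartSurfacePositions[touch.fingerId] = (Vector2)startSurfacePosition;
    }
}
```

The Ended branch would then also need a matching `fingerStartSurfacePositions.Remove(touch.fingerId);` so the dictionary does not keep stale entries.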

Now, for my camera rig behaviour:

TopDownCameraRigBehaviour.cs

    using UnityEngine;

    public class TopDownCameraRigBehaviour : MonoBehaviour
    {
        public float moveSpeed = 10;

        void Update()
        {
            float horizontal = Input.GetAxis("Horizontal");
            float vertical = Input.GetAxis("Vertical");
            transform.Translate(new Vector3(horizontal, 0, vertical) * moveSpeed * Time.deltaTime);
        }
    }

I need a reference to my pointer behaviour first. Then, when the player is dragging, all axis input needs to be ignored. Without testing, I changed my Update function to:

TopDownCameraRigBehaviour.cs (partial)

    if (surfacePointerBehaviour.DragSurfaceDelta != null)
    {
        Vector2 dragDelta = (Vector2)surfacePointerBehaviour.DragSurfaceDelta;
        transform.Translate(new Vector3(dragDelta.x, 0, dragDelta.y));
    }
    else
    {
        float horizontal = Input.GetAxis("Horizontal");
        float vertical = Input.GetAxis("Vertical");
        transform.Translate(new Vector3(horizontal, 0, vertical) * moveSpeed * Time.deltaTime);
    }

Now I link the pointer behavior to the camera behaviour from the Unity editor and start my project, expecting the worst, hoping for the best.

Well, at least dragging is detected properly.

I can only assume what’s going wrong at this point: my DragSurfaceDelta variable doesn’t point to the right direction, and instead points away from the position the camera needs to be moved to.

This might be partially due to the fact that my camera isn’t aligned with the surface, but instead is angled 45 degrees. To fix this, I need to consider the angle between the camera’s direction relative to the surface, and rotate my DragSurfaceDelta by that angle.

I think I need to start by getting the global worldspace rotation of both the surface and the camera. According to the Transform docs, transform.rotation returns the global rotation, not the local rotation relative to the parent (which is called transform.localRotation).

I don’t need the surface rotation, since I’m actually using an infinite plane generated at runtime without any rotation or translation to get the surface coordinates (GetSurfacePosition raycasts to a new Plane(Vector3.up, Vector3.zero)). I therefore know the surface isn’t rotated - or at least the coordinates returned by GetSurfacePosition aren’t rotated. Because the surface isn’t rotated, only the camera’s rotation needs to be considered.

I first have to compensate for the camera’s rotation, then I can move the camera, and finally undo the camera rotation compensation.

TopDownCameraRigBehaviour.cs (partial)

    Vector2 dragDelta = (Vector2)surfacePointerBehaviour.DragSurfaceDelta;
    float angle = Camera.main.transform.rotation.eulerAngles.y;
    transform.Rotate(Vector3.up, -angle);
    transform.Translate(-new Vector3(dragDelta.x, 0, dragDelta.y));
    transform.Rotate(Vector3.up, angle);

This works surprisingly well and I’m really happy with the result. The only problem was a horrible lag of about half a second, but this was easily solved by removing the 0.5-second threshold.
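A possible simplification, as an untested sketch: since DragSurfaceDelta is already expressed in worldspace, Transform.Translate can be told to move along the world axes with Space.World, which may make the rotate and un-rotate steps unnecessary. This should match the rotate-translate-rotate version when the rig is only rotated around the Y axis and its yaw equals the camera’s. The sketch also shows the reference to the pointer behaviour as a public field to be linked in the editor.

```csharp
using UnityEngine;

public class TopDownCameraRigBehaviour : MonoBehaviour
{
    public float moveSpeed = 10;

    // Linked from the Unity editor.
    public SurfacePointerBehaviour surfacePointerBehaviour;

    void Update()
    {
        Vector2? dragDelta = surfacePointerBehaviour.DragSurfaceDelta;
        if (dragDelta.HasValue)
        {
            // The delta is in worldspace, so translate along world axes.
            // The minus sign moves the camera against the finger's offset.
            Vector3 worldDelta = new Vector3(dragDelta.Value.x, 0, dragDelta.Value.y);
            transform.Translate(-worldDelta, Space.World);
        }
        else
        {
            // Keyboard movement stays relative to the rig's own orientation.
            float horizontal = Input.GetAxis("Horizontal");
            float vertical = Input.GetAxis("Vertical");
            transform.Translate(new Vector3(horizontal, 0, vertical) * moveSpeed * Time.deltaTime);
        }
    }
}
```

Keeping the keyboard branch in local space means pressing forward still moves in the direction the rig is facing, while the drag branch works purely in surface coordinates.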

To finish it off, I did some refactoring to get rid of the dictionaries and instead use a class to keep track of individual fingers. The final SurfacePointerBehaviour is as follows:

SurfacePointerBehaviour.cs

    using UnityEngine;
    using UnityEngine.EventSystems;
    using System.Collections.Generic;

    public class SurfacePointerBehaviour : MonoBehaviour
    {
        private class Finger
        {
            public Vector2 startSurfacePosition;
            public Vector2 currentSurfacePosition;
            public Vector2 startScreenPosition;
            public Vector2 currentScreenPosition;
            public bool dragging;
            public float ScreenDistance => Vector2.Distance(startScreenPosition, currentScreenPosition);
        }

        private readonly Dictionary<int, Finger> fingers = new Dictionary<int, Finger>();
        private Finger primaryFinger = null;
        private Vector2Int? mouseClickPosition = null;
        private Vector2Int? fingerTapPosition = null;

        public Vector2? DragSurfaceDelta { get; private set; } = null;
        public float dragMinimumDistance = 20f;

        void Start()
        {
            Input.simulateMouseWithTouches = false;
        }

        public Vector2Int? GetPointClicked()
        {
            return mouseClickPosition ?? fingerTapPosition;
        }

        void Update()
        {
            UpdateMouseClickPosition();
            UpdateFingerTapPosition();
        }

        private void UpdateMouseClickPosition()
        {
            if (Input.GetMouseButtonDown(0)
                && !EventSystem.current.IsPointerOverGameObject())
            {
                mouseClickPosition = GetFlooredSurfacePosition(Input.mousePosition);
            }
            else
            {
                mouseClickPosition = null;
            }
        }

        private void UpdateFingerTapPosition()
        {
            fingerTapPosition = null;
            DragSurfaceDelta = null;
            if (Input.touchCount > 0)
            {
                for (int i = 0; i < Input.touchCount; i++)
                {
                    HandleTouch(Input.GetTouch(i));
                }
            }
            if (primaryFinger != null)
            {
                DragSurfaceDelta = primaryFinger.currentSurfacePosition - primaryFinger.startSurfacePosition;
            }
        }

        private void HandleTouch(Touch touch)
        {
            Vector2 screenPosition = touch.position;
            Vector2? surfacePosition = GetSurfacePosition(screenPosition);
            if (touch.phase == TouchPhase.Began
                && !EventSystem.current.IsPointerOverGameObject(touch.fingerId))
            {
                if (surfacePosition != null)
                {
                    Finger finger = new Finger
                    {
                        currentSurfacePosition = (Vector2)surfacePosition,
                        startSurfacePosition = (Vector2)surfacePosition,
                        currentScreenPosition = screenPosition,
                        startScreenPosition = screenPosition,
                        dragging = false
                    };
                    fingers[touch.fingerId] = finger;
                }
            }
            else if (fingers.ContainsKey(touch.fingerId))
            {
                Finger finger = fingers[touch.fingerId];
                finger.currentScreenPosition = screenPosition;
                if (surfacePosition != null)
                {
                    finger.currentSurfacePosition = (Vector2)surfacePosition;
                }
                if (touch.phase == TouchPhase.Moved)
                {
                    if (finger.ScreenDistance >= dragMinimumDistance)
                    {
                        finger.dragging = true;
                        if (primaryFinger == null)
                        {
                            primaryFinger = finger;
                        }
                    }
                }
                else if (touch.phase == TouchPhase.Ended)
                {
                    if (primaryFinger == finger)
                    {
                        primaryFinger = null;
                    }
                    else if (!finger.dragging)
                    {
                        fingerTapPosition = Vector2Int.FloorToInt(finger.startSurfacePosition);
                    }
                    fingers.Remove(touch.fingerId);
                }
            }
        }

        private Ray ray;
        private readonly Plane plane = new Plane(Vector3.up, Vector3.zero);
        private float distance = 0;

        private Vector2Int? GetFlooredSurfacePosition(Vector2 screenPosition)
        {
            Vector2? surfacePosition = GetSurfacePosition(screenPosition);
            if (surfacePosition == null)
            {
                return null;
            }
            else
            {
                return Vector2Int.FloorToInt((Vector2)surfacePosition);
            }
        }

        private Vector2? GetSurfacePosition(Vector2 screenPosition)
        {
            ray = Camera.main.ScreenPointToRay(screenPosition);
            if (plane.Raycast(ray, out distance))
            {
                var point = ray.GetPoint(distance);
                return new Vector2(point.x, point.z);
            }
            return null;
        }
    }
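One case the class above does not cover is TouchPhase.Canceled, which Unity reports when the system stops tracking a touch. A finger that ends this way would stay in the fingers dictionary, and if it was the primary finger, DragSurfaceDelta would keep being reported. A possible variant of the Ended branch in HandleTouch is sketched below. Treating a canceled touch as "clean up, but never a tap" is my assumption about the desired behaviour.

```csharp
// Sketch: replacement for the Ended branch inside HandleTouch.
else if (touch.phase == TouchPhase.Ended || touch.phase == TouchPhase.Canceled)
{
    if (primaryFinger == finger)
    {
        // Stop the camera drag regardless of how the touch ended.
        primaryFinger = null;
    }
    else if (!finger.dragging && touch.phase == TouchPhase.Ended)
    {
        // Only a finger that was actually lifted counts as a tap.
        fingerTapPosition = Vector2Int.FloorToInt(finger.startSurfacePosition);
    }
    fingers.Remove(touch.fingerId);
}
```

The rest of HandleTouch stays the same, since the branch still sits behind the `fingers.ContainsKey(touch.fingerId)` check.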